Summary

Digital services are an integral part of our modern lives, and verifying our identity online securely and effectively is necessary for using and trusting these services. At the same time, malicious actors seek to exploit weaknesses in the verification mechanisms. An increasingly important vector for such exploitation is social manipulation, where users are tricked into giving away the information needed to access online services.

Digital services typically offer several ways for a person to verify their identity, usually requiring multiple factors. These can include something you know (passwords or PIN codes), something you have (a specific device or a physical key) or something you are (biometrics). While passwords and PIN codes are widely used, we see a shift towards greater use of biometric data to strengthen the security of identity verification. Biometric data encompasses unique information about our physical, physiological or behavioural characteristics, most commonly our fingerprints or facial images.

With its solution “SALT”, Mobai seeks to make digital identity verification more robust by, among other things, performing real-time checks of facial images on mobile devices as users verify their identity. These checks are an addition to other security measures.

Because biometric data is unique and the consequences can be serious if it is stolen, it is subject to strict privacy regulations that limit its collection, storage and use.

To address these concerns, Mobai seeks to reduce the privacy risks for users associated with processing biometric data. It aims to do this by leveraging artificial intelligence and novel machine learning techniques, while also expanding how biometric information can be used for digital verification.

In this report, the Norwegian Data Protection Authority will address key legal challenges posed by “SALT”, including whether Mobai’s technology can expand how biometric information can be used for verification purposes.

Our aim is to provide valuable and broadly applicable legal insights that can benefit Mobai and its partners, as well as other actors working in related fields or with similar technology.

Main findings

In this sandbox project, we have addressed key legal challenges related to how the SALT solution works. These challenges are also relevant to other companies working in similar fields and facing similar legal considerations.

Our legal assessments of these challenges are as follows:

Are facial images considered biometric data?

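A facial image in itself does not always qualify as biometric data for GDPR purposes. Under Article 4(14) and Recital 51 GDPR, a photograph is covered by the definition of biometric data only when it is processed through specific technical means that allow the unique identification or authentication of a natural person. However, we do consider biometric templates to be biometric data. We also consider the processing operations performed on the facial image to generate the biometric template (i.e., the biometric feature extraction) to be processing of biometric data under the GDPR.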

Although facial images in themselves are not systematically considered biometric data, processing of facial images may nevertheless be subject to the same security requirements as processing of biometric data.

Are protected biometric templates personal information subject to the GDPR?

We have discussed with Mobai whether protected biometric templates, which result from processing using homomorphic encryption, can be considered anonymous or whether they remain personal information subject to the GDPR. We argue that while homomorphic encryption and protected templates make the information incomprehensible to other parties without the encryption key, this does not necessarily guarantee anonymity. As long as the encryption key can be used to link the template to a real person, we contend that the template falls within the definition of personal information, and the GDPR therefore applies.

Is biometric information used for verification considered to entail the processing of special categories of personal data (Article 9)?

There is legal uncertainty as to whether biometric verification entails processing of special categories of personal data as covered by Article 9(1). This issue cannot be resolved in this sandbox project. However, based on our discussions, the Norwegian Data Protection Authority considers it likely that biometric verification is covered by Article 9(1). We therefore recommend that those involved in the SALT project treat the biometric data used for verification purposes as a special category of personal data.

What is the difference between primary and secondary processing of information in the SALT solution?

The primary purpose of SALT is to ensure secure verification of identities. We regard certain “pre-processing” operations, which in Mobai’s case comprise template creation and the comparison of protected templates, as intrinsic to this primary purpose. However, this applies only when they are strictly necessary to achieve that purpose. We regard processing that is not carried out for the purpose of identity verification as processing for secondary purposes. This includes processing aimed at improving the algorithms and the system as a whole.

It follows that the legal basis for the primary processing does not extend to processing carried out for these secondary purposes.

Is it possible to store biometric information on a central server?

Mobai’s approach to storing and processing biometric data relies on centralized storage to achieve its enhanced security. While centralized storage allows for advanced encryption, access controls and other robust safeguards, it also raises general concerns about large-scale collection of biometric and other data that can be used to verify digital identities.

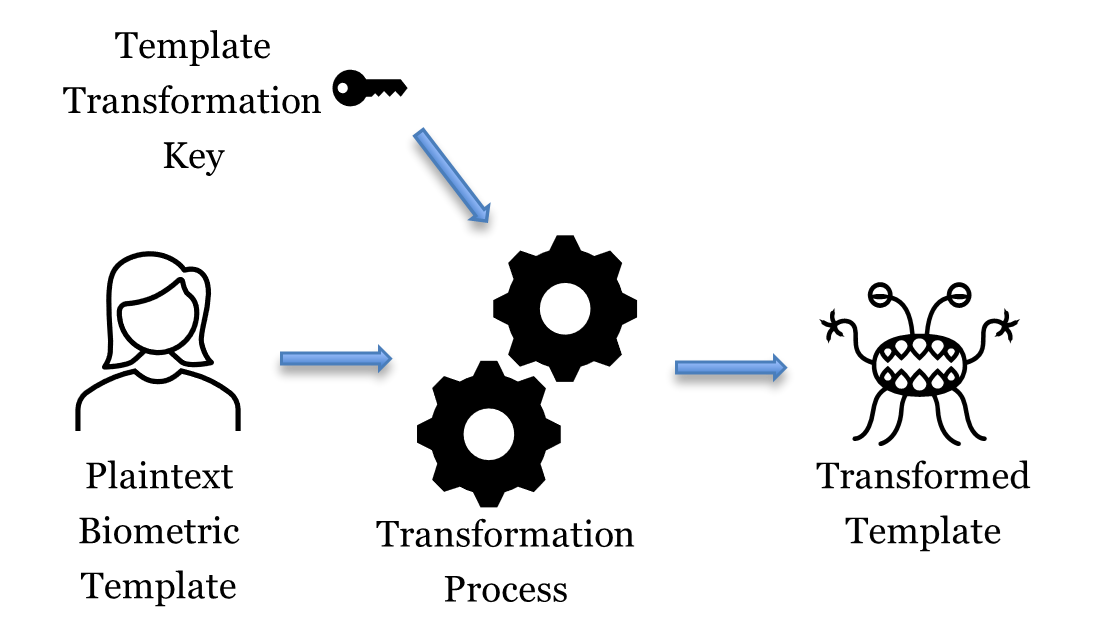

Mobai asserts that by using innovative technology to generate Protected Biometric Templates (PBTs), it is possible to balance security and privacy needs by combining the advantages of decentralized devices with those of central storage and processing.

In addition, Mobai stores encrypted images centrally for AI model training, which it considers necessary. Mobai states that it applies strict data minimization practices, with the aim of balancing security and compliance in the management of sensitive data.

In this project, the Norwegian Data Protection Authority has assessed that Mobai's solution may make central storage and processing of biometric data possible in cases where such storage was previously not considered secure enough, given the significant concerns associated with central storage.

What is the sandbox?

In the sandbox, participants and the Norwegian Data Protection Authority jointly explore issues relating to the protection of personal data in order to help ensure the service or product in question complies with the regulations and effectively safeguards individuals’ data privacy.

The Norwegian Data Protection Authority offers guidance in dialogue with the participants. The conclusions drawn from the projects do not constitute binding decisions or prior approval. Participants are at liberty to decide whether to follow the advice they are given.

The sandbox is a useful method for exploring issues where there are few legal precedents, and we hope the conclusions and assessments in this report can be of assistance to others addressing similar issues.